Applied AI Bites 🌮 #4

AI Agents are coming 🤖🤖🤖🤖🤖🤖

Hey folks! Sorry for the silence lately - but trust me, this one's worth the wait! Coming up: how we are moving away from simple chatbots towards AI agents and what's cooking at Anthropic (you know, the company behind Claude and other top-tier AI models, right up there with OpenAI). We've got inside info straight from their CEO on where they're headed, both now and down the road.

Agenda

Agents everywhere. How did we get here?

What is Anthropic betting on for the Future?

Agents, Agents, Agents everywhere. How did we get here?

Agents are the new frontier of AI. They've become the framework that practitioners and startups use to market themselves. But is this concept really new? Yes and No.

Let's briefly follow the history of Agents starting from year zero: the release of ChatGPT in November 2022.

1st Phase: LLMs are AGI

From December 2022 to March 2023, agents weren't really a topic. People were still trying to understand what to do with LLMs, and there was a widespread belief that we could achieve AGI simply through new model iterations (GPT-4, 5, 6, or whatever). The main focus was on developing what was initially called "semantic search." This technique later evolved into RAG (Retrieval Augmented Generation). The early approach was very simple (now called Naive RAG) compared to more complex techniques (which we now call Agentic RAG).
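To make "Naive RAG" concrete, here is a minimal sketch of the retrieve-then-prompt pattern. Everything in it is an assumption for illustration: `embed` is a toy bag-of-words stand-in for a real embedding model, and `retrieve` and `build_prompt` are hypothetical helper names, not any library's API.

```python
import math
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: List[str], k: int = 1) -> List[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(query: str, docs: List[str]) -> str:
    """Naive RAG: paste the retrieved text into the prompt sent to the LLM."""
    context = "\n".join(retrieve(query, docs))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    docs = ["cats purr when happy", "the stock market fell today"]
    print(build_prompt("why do cats purr", docs))
```

The "naive" part is that retrieval happens once, with no query rewriting, no re-ranking, and no check that the retrieved text actually answers the question.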

2nd Phase: AutoGPT and BabyAGI

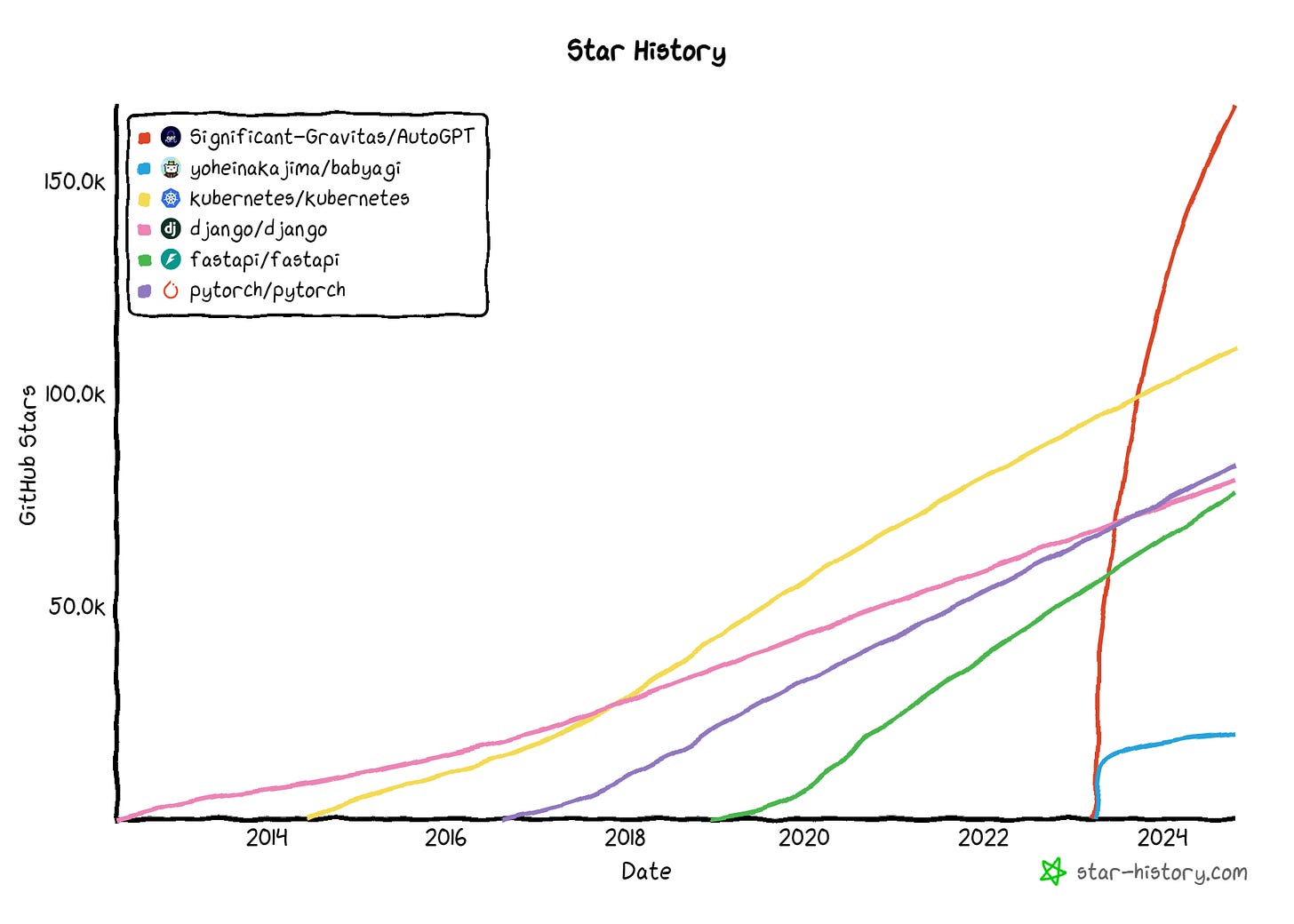

Around April 2023, it became clear that LLMs alone couldn't achieve all the cool things our imagination could envision. The term "Agents" started appearing more frequently, and projects like AutoGPT and BabyAGI became incredibly popular. And I mean REALLY popular. AutoGPT smashed every conceivable GitHub star growth record. In the image above you can see how ridiculous its growth was compared to previous VERY SUCCESSFUL projects like Kubernetes and Django.

The main ideas revolved around:

Providing tools to LLMs (such as web browsing, email capabilities, etc.)

Implementing a planner cycle: having the LLM develop a step-by-step plan for specific queries/tasks and then execute each step

Incorporating concepts like persistence and memory (cleverly leveraged by vector database companies to raise hundreds of millions in funding)
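The three ideas above (tools, a planner cycle, and memory) can be sketched in a few lines. This is a rough illustration, not how AutoGPT or BabyAGI are actually implemented: the tools are stubs, `plan` returns a hard-coded plan where a real agent would call an LLM, and all names are hypothetical.

```python
from typing import Callable, Dict, List


# Hypothetical tools: a real agent would wrap web browsing, email, etc.
def search_web(query: str) -> str:
    return f"results for '{query}'"


def send_email(body: str) -> str:
    return f"email sent: {body}"


TOOLS: Dict[str, Callable[[str], str]] = {
    "search_web": search_web,
    "send_email": send_email,
}


def plan(task: str) -> List[dict]:
    """Stand-in for the LLM planner: returns a fixed step-by-step plan."""
    return [
        {"tool": "search_web", "input": task},
        {"tool": "send_email", "input": "summary of findings"},
    ]


def run_agent(task: str) -> List[str]:
    """Execute each planned step and log what came back."""
    memory: List[str] = []  # naive memory: a log of past observations
    for step in plan(task):
        tool = TOOLS[step["tool"]]
        observation = tool(step["input"])
        memory.append(observation)
    return memory


if __name__ == "__main__":
    for line in run_agent("AI agents news"):
        print(line)
```

In the real projects the plan itself came from the model and could be revised after each observation, which is exactly where things tended to go off the rails.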

Problem: The technology wasn't (and still isn't) ready for solving generic tasks. Without constraints and clear directions, LLMs hallucinate and make mistakes. In multi-step tasks requiring 5 or 10 steps to complete, an error in any single step cascades through the process, rendering the final output unusable.

Results: While these projects were fascinating, they remained far from production-ready applications.
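A bit of arithmetic shows why cascading errors hurt so much. The sketch below assumes each step succeeds independently with the same probability; the 95% figure is an illustrative assumption, not a measured number.

```python
def chain_success_rate(step_accuracy: float, steps: int) -> float:
    """Probability that every step succeeds, assuming independent steps."""
    return step_accuracy ** steps


if __name__ == "__main__":
    # Assumed per-step accuracy of 95%, for illustration only.
    for n in (1, 5, 10):
        print(n, round(chain_success_rate(0.95, n), 3))
```

Under these assumptions, a 5-step task succeeds about 77% of the time and a 10-step task about 60% of the time, even though each individual step looks reliable.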

3rd Phase: First Glimpses of Agentic Behaviors

OK, OK, we got it: agents can't do everything. Still, during 2023, features like ChatGPT's Code Interpreter emerged as the closest thing we had to working agents. OpenAI also attempted to launch plugins, which seemed promising in theory but struggled with integration issues. Indeed, having integrations that both function properly and are called at the right time with the correct parameters remains one of the key challenges in developing functional AI Agents.
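One common mitigation for the "correct parameters" problem is to validate every tool call the model proposes before executing it. Here is a minimal sketch; the `book_table` tool, the schema format, and `validate_call` are all hypothetical and not part of any plugin API.

```python
from typing import Any, Dict, List

# Hypothetical tool registry: tool name -> required parameter names and types.
TOOL_SCHEMAS: Dict[str, Dict[str, type]] = {
    "book_table": {"restaurant": str, "guests": int},
}


def validate_call(call: Dict[str, Any]) -> List[str]:
    """Return a list of problems with a model-proposed tool call (empty if OK)."""
    schema = TOOL_SCHEMAS.get(call.get("tool"))
    if schema is None:
        return [f"unknown tool: {call.get('tool')!r}"]
    args = call.get("args", {})
    problems = []
    for name, expected in schema.items():
        if name not in args:
            problems.append(f"missing parameter: {name}")
        elif not isinstance(args[name], expected):
            problems.append(f"{name} should be {expected.__name__}")
    return problems


if __name__ == "__main__":
    bad_call = {"tool": "book_table", "args": {"restaurant": "Luigi", "guests": "two"}}
    print(validate_call(bad_call))
```

A check like this catches malformed calls, but not the harder half of the problem: whether the tool should have been called at that moment at all.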

On the research front, papers like Voyager and MetaGPT advanced interesting new concepts and offered an early glimpse of agents' potential capabilities.

Andrej Karpathy came up with the analogy between LLM systems (agents) and operating systems. The idea became very popular, and many startups started using the line “We are the AI OS for X”.

4th Phase: Unbundling

In the first quarter of 2024, people started to focus on more specific products that do one single thing well, rather than a horizontal product like ChatGPT, which also saw its growth slow down.

People are now building LLM systems, called Agents, for specific industry verticals or tasks (for example, coding with Devin and Cursor). It is still unclear what the right level of autonomy is for these agents, and there is a lot of research on UI/UX.

It is clear that in many cases people and agents will work together with very tight feedback loops. The best-known examples are Artifacts in Claude and Canvas in ChatGPT.

What is coming next?

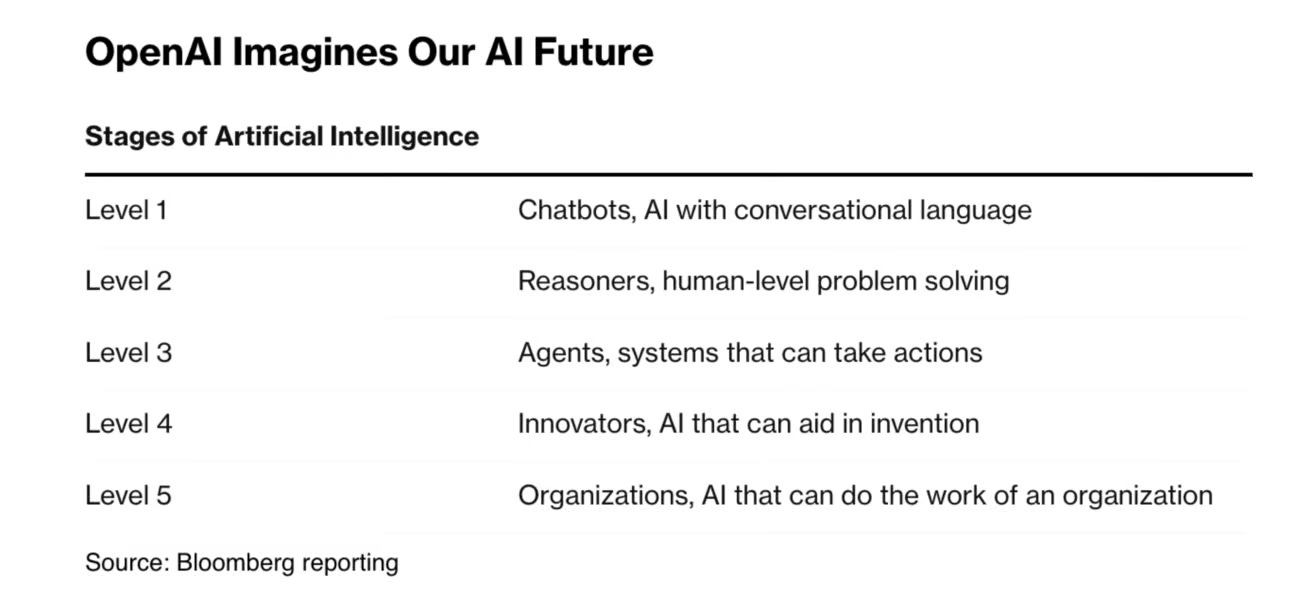

The framework above from OpenAI shows how the company thinks about the coming decades.

This is a simplification of course.

We are supposedly between Level 2 and Level 3. The o1-preview model is supposed to be the first step in that direction (but arguably we are not totally there yet).

Startups are now trying to build the first products that could be classified as Level 3. 2025 will be about the first success stories. Level 3 could bring innovations for years, if not decades to come.

The ideas are there for everybody to grab. Code assistants, sales assistants improving the closing rate of below-average salespeople, personal assistants doing the job of executive VAs, customer support, financial planners, health coaches, market researchers, etc, etc… I can go on for longer than you are willing to read (congratulations on making it this far btw).

A mixture of improvements in the base models and good engineering will lead us there. Many teams are tackling the same problems. Ideas are a dime a dozen and execution, as always, will be key.

Anthropic's bets for the Future

I had the pleasure of attending Anthropic's first Builder Day, which included a fireside chat with Dario Amodei, CEO of Anthropic. Here are my takeaways:

Dario sees room for about 5 major players in the top-tier LLM space, each carving out their own niche. Anthropic just dropped Computer Use - pretty cool stuff that lets AI control your mouse cursor!

What sets them apart? While OpenAI takes safety seriously, Anthropic has built it into their DNA from day one. They're betting big that as AI goes mainstream, safety won't just be a nice-to-have - it'll be essential.

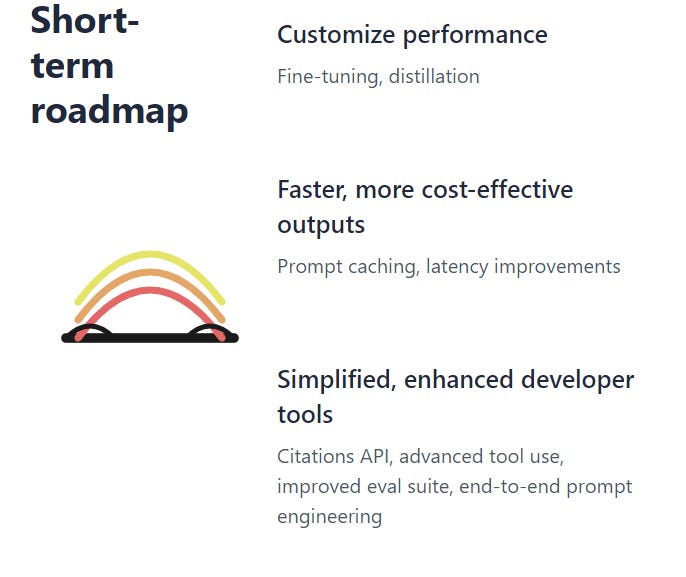

Here is the short-term roadmap for Anthropic. (This is not the original picture: I asked Claude itself to write React code to visualize the content of the crappy photo I took.)

And here is the Anthropic long-term roadmap:

Unlike OpenAI, Anthropic's main revenue stream comes from API usage, not the web chat interface. This explains why they're pouring so much energy into making developers' lives easier. Plus, they're really diving deep into model interpretability - making AI more transparent. They want to crack open the black box of how these models think. I can't wait for them to roll out tools that help us understand why AI models choose certain words/tokens over others. It'll be fascinating to peek under the hood!

Shameless plug: At Duenders we have been working with AI agents and agentic RAG for a couple of years. There is a lot of nitty-gritty to get right, but if you do, the efficiency gains and workflow enhancements you get are mind-blowing. Whether you want to explore use cases or are struggling to bring prototypes to production, feel free to reach out!